The Competency-based Evaluation of Educational Crew of Dental Faculty’s Obstacles in Institutionalizing Performance Assessments

Abstract

Aims:

This study evaluates the educational crew of dental faculty’s lived experiences facing obstacles and requirements in institutionalizing performance assessments to implement a professional competency-based evaluation system.

Background:

Competency-based evaluation of learning and teaching processes, which indicates the achievement rate of educational goals and the quality of education, has been adopted as a key policy in the developed world.

Objective:

The main objective of this study was to evaluate obstacles in institutionalizing performance assessments for the educational crew.

Methods:

This qualitative study used a semi-structured interview in a focus group discussion. The experience of the educational crew regarding the obstacles of using performance assessments and their approaches to conducting a professional competency-based evaluation was assessed. The recruited participants were educational supervisors, professors of orthodontics and prosthodontics, and the medical education department and evaluation committee members of the faculty of dentistry at the University of Tabriz. The purposive sampling technique was used and continued until reaching saturation. Five focus group discussions were conducted with fourteen educational crew and three medical education department members. The data were analysed using thematic analysis.

Results:

The interview analysis results yielded 450 codes in three general categories, including “current condition of clinical education,” “obstacles of implementing new evaluation methods,” and “requirements for effective evaluation of clinical skills.” According to the results, changes in evaluation methods are necessary to respond to community needs. There are also many cultural problems with applying western models in developing countries.

Conclusion:

The medical community should be directed towards a competency-based curriculum, especially in procedure-based fields, such as dentistry.

Other:

The educational crew is moving towards altering traditional evaluation methods (the traditional classroom-based lectures). This paradigm change requires support from the department and the provision of infrastructure.

1. INTRODUCTION

The general curriculum for faculties of dentistry in Iran was accepted on June 17, 1988 [1]. The basic alterations in 1999 involved the introduction of courses in community-based education, primary dental health care, and comprehensive care [2]. These changes were in line with the integration of medical education and health care services in the country [3]. Similar modifications were also made to the medical education curriculum [4]. In the present dentistry curriculum, the courses fall into three groups: general, basic, and specialized. The courses are categorized into thematic units, and each subject is individually planned. Thus, the two main features of the current dentistry curriculum in Iran are: a) the separation of theoretical and practical courses, with the practical/clinical courses founded on the theoretical ones; and b) the separation of theoretical and practical/clinical training according to the branches of science [1].

Assessing the clinical performance of trainees in the clinical workplace is one of the main concerns of clinical educators. Accurate assessment of trainee performance is among the requirements for ensuring physicians’ performance [5]. Thus, one of the responsibilities of the medical community is to obtain assurance of trainees’ professional competence, which is possible through designing a standard competency-based evaluation. In the fields of medicine, history-taking skills, physical examination, communication skills with the patient, clinical judgment, professionalism, and effective clinical care are among the professional abilities that should be evaluated [6, 7]. According to Miller’s pyramid, competencies are evaluated by means of performance-based assessments such as the portfolio, Objective Structured Clinical Examination (OSCE), Direct Observation of Procedural Skills (DOPs), and Mini Clinical Evaluation Exercise (Mini-CEX) [8-10]. Performance-based assessments are the only type of assessment that can evaluate clinical skills [11, 12], trainee behavior in dealing with the patient, communication skills, and views towards treatment and diagnosis in a real-life consultation with real patients [13, 14], and provide feedback after observing trainees’ performance [15]. In the field of dentistry, patients’ fear and anxiety about dental services are very prevalent. Therefore, in addition to diagnostic and therapeutic skills, the trainee’s command of communication skills and management of the patient’s fear and anxiety are among the fundamental competencies that the trainee must acquire [11, 16]. These skills can also be evaluated by means of performance assessments [17, 18]. In most studies, trainees consider performance assessment a positive experience in the evaluation process and state that receiving feedback is the most important component leading to their learning [19, 20].

In the field of dentistry, various studies on the application of performance assessment methods consider performance assessment an effective method of evaluating the clinical performance of trainees [7]. For example, in the pilot study conducted by Samer Kasabah in Saudi Arabia, dentistry professors and students considered the Mini-CEX an effective evaluation method, and students considered its most important strength to be immediate, constructive feedback on their weaknesses for improving their performance [21]. In the study by Ramrao Rathod, graduate dentistry students also considered the Mini-CEX better than traditional evaluation methods; it helps the relationships between professor and student and between student and patient, while also improving trainees’ clinical skills and analyses [13]. Despite the significance of performance assessments and their role in learning and evaluation, various studies have stated that performance evaluation methods are either not completely implemented, or faculty members are not willing to implement them. For example, in the study of Behere in India [17], dentistry students reported that professors do not usually provide feedback on their performance and do not guide them towards improving it. In addition, in that study, dentistry professors considered one of the most important reasons for not implementing these assessments to be the fact that they are time-consuming. In studies conducted in Iran, this evaluation method is considered effective, although it has some deficiencies, such as disagreement among assessors and inability to provide feedback to the trainees [22].

Despite the significance of performance assessments in confirming the professional competency of trainees [23, 24], much of the clinical skills assessment of dentistry students has been based on the faculty members’ overall perception of the students’ performance or assessment of their mental abilities [25]. Based on a review of the literature up to 2012, no studies have been recorded on using new evaluation methods in the field of dentistry in Iran [26]. Therefore, identifying barriers to implementing them in the context of dental education in Iran is necessary to adapt performance assessment to Iran’s academic community. Hence, this qualitative study aims to evaluate the lived experiences of the educational crew regarding the obstacles and requirements of institutionalizing performance assessments to implement professional competency-based evaluation.

2. MATERIALS AND METHODS

2.1. Methodology

2.1.1. Study Participants

The current research is a qualitative study, including a study population of assessment experts consisting of educational supervisors and faculty members of orthodontics, prosthodontics and medical education faculties and the evaluation committee members of the dentistry faculty. Fourteen faculty members from the orthodontics and prosthodontics department and three faculty members from the medical education department participated in the focus group discussion interviews. The social/personal information of the participants is provided in Table 1.

| Participants | Gender | Age (years) | Work Experience (years) | Scientific Ranking |
|---|---|---|---|---|
| Orthodontics | Male=5, Female=3 | 30-40=2, 40-50=3, 50-60=3 | 5-15=3, 15-25=3, 25-35=2 | Assistant professor=5, Associate professor=3 |
| Dental Prostheses | Male=3, Female=3 | 30-40=3, 40-50=2, 50-60=1 | 5-15=3, 15-25=2, 25-35=1 | Assistant professor=3, Associate professor=3 |
| Medical Education | Male=2, Female=1 | 40-45=2, 45-60=1 | 15-25=2, 25-35=1 | Assistant professor=2, Professor=1 |

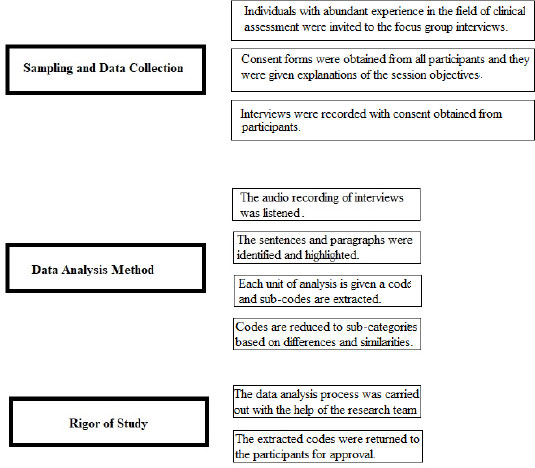

2.1.2. Sampling and Data Collection

The purposive sampling method was used to select the participants of this study, and data were collected through focus group discussions (FGD) using semi-structured interviews with faculty members of the orthodontics and prosthodontics departments, medical education faculty, and the assessment committee members of the Faculty of Dentistry (who are faculty members). The committee’s areas of activity include evaluation of educational programs and training courses, evaluation of professors and teachers, and internal and external evaluation of educational groups in the Faculty of Dentistry.

Individuals with abundant experience in the field of clinical assessment were invited to the focus group interviews. The participants themselves agreed upon the time and location of FGD sessions so that faculty members could participate in these sessions without any distractions. Prior to starting sessions, an interview guideline was provided, including a number of open-ended questions. Among the questions were: 1) Please state your experience regarding the current clinical skills assessment condition in the orthodontic and restorative dentistry department. 2) According to the multiple years of experience you have acquired, what are the most important obstacles in using new evaluation methods for clinical skills in the field of dentistry?

Prior to the beginning of each session, consent forms were obtained from all participants, who were given explanations of the session objectives and notified that the information provided would remain anonymous. Participants were informed that their participation was voluntary and that they could leave the session whenever they felt it necessary. Interviews were recorded with the participants’ consent. After each session, the interviews were transcribed word for word and listened to numerous times to achieve immersion in the data. Data analysis was conducted concurrently with data collection, and the results of the analyses for each session were used as a guideline for the following sessions. The interview sessions continued until saturation was reached, which occurred after five FGD sessions (based on the previous studies).

2.1.3. Data Analysis Method

Data analysis was conducted using the common content analysis method concurrently with data collection. That is, the interviews were initially transcribed and the audio recording of the interviews was listened to numerous times to better understand the content.

The first phase of the coding process then began, with an emphasis on the explicit and implicit content, by identifying and highlighting the sentences and paragraphs of the unit of analysis. Each unit of analysis was given a code, and sub-codes were extracted. The codes were then reduced to subcategories and categories based on differences and similarities. Codes were continuously checked, and any contradiction was resolved through discussion. Finally, an attempt was made to obtain an overall picture of the topic at hand by relating the categories to one another [27].

2.1.4. Rigor of Study

To determine the validity of the data in the study, continuous evaluation of the data and concurrent data analysis were used. After collecting the data and reviewing the codes extracted by participants, the data analysis process was carried out with the help of the research team (N, B), and the data were continuously checked and analyzed. The extracted codes were returned to the participants for approval to confirm an accurate understanding of the participants’ viewpoints. Details of the research were accurately documented, and the phases of the research were explained in detail from beginning to end so that an external observer could conduct an evaluation. In addition, an attempt was made to use appropriate and extensive samples, considering individual characteristics such as age, work experience, and scientific ranking, to achieve data diversity. Fig. 1 shows the research paradigm.

3. RESULTS

Fourteen faculty members from the orthodontics and prosthodontics department and three faculty members from the medical education department participated in the focus group discussion interviews.

A total of 450 codes were obtained from analyses of the interviews in three overall categories: “current condition of clinical education”, “obstacles in implementing new evaluation methods”, and “approaches to improving the performance assessment of dentistry students”.

In the realm of “current conditions of clinical education” two categories of “phase of accepting the necessity for creating changes in clinical skill evaluation methods by the educational crew”, and “beginning the transition stage from traditional clinical evaluation methods (the traditional classroom-based lectures) to new evaluation methods” were obtained (Table 2).

| Category | Sub-category | Open code |
|---|---|---|
| Current situation of clinical skills evaluation in the orthodontic prosthesis department | Admission stage: necessity of creating changes in clinical skills evaluation methods by educational crew | • Informality between clinical evaluations and ideal conditions • Subjectivity of teaching and clinical evaluation • Evaluating clinical skills for clinical assessment • Inadequate standards for assessments • Necessity of changing evaluations of clinical skills |
| | Beginning of the transfer from traditional clinical skills evaluation methods to innovative evaluation methods | • Spontaneous use of the DOPs method for practical skills • Moving towards improved quality by holding integrated assessments (practical + theoretical) • The need to focus on procedural assessments |

The most frequently repeated viewpoints were as follows:

Viewpoints of the educational crew in the category “phase of accepting the necessity for creating changes in clinical skills evaluation methods by the educational crew”

Participant Number 2 stated that: “Training and evaluation are done subjectively and are not consistent to a certain extent. As a result, it seems that both the grade and evaluation quality are subjective, do not have adequate reliability, and depend on the professor’s opinion.”

Participant Number 5 stated that: “Quality of assessment is average in the current state and is a long way from reaching an ideal state. Sometimes it has not been clear whether all students have acquired all the necessary skills. The tools and skills that should be acquired by a general dentistry student need to be specified; actually, there is no rule of thumb and it needs to pass this stage.”

Viewpoints of the educational crew in the category “beginning the transition stage from traditional clinical evaluation methods to new evaluation methods”

| Category | Sub-category | Open Code |
|---|---|---|
| Obstacles in effective clinical skills evaluation in the field of dentistry | Essence of clinical skills evaluation | • Variety of clinical skills in the department • Difficulty with categorizing skills and adequate scoring • High costs • Numerous practical stages and required skills • Presence of the patient is required in evaluating some skills • High stress rate of these assessments for the student |
| | Faculty members’ lack of awareness regarding innovative methods of evaluation | • Lack of knowledge and awareness or experience regarding similar cases in other fields and universities • Faculty member unfamiliarity with all evaluation methods |
| | Faculty members’ resistance towards using innovative evaluation methods | • Unnecessary emphasis on written exams for clinical assessment • High workload • Conducting laboratory duties along with clinical duties • Some faculty members do not use checklists and logbooks • Lack of coordination among faculty members of one department |
| | Poor supervision of clinical evaluation | • Inadequate training and evaluation of faculty members • No guidelines for scoring each skill • No unified procedure in training and evaluation • Conducting subjective evaluations of students’ performance • Lack of emphasis on fundamental educational priorities in skills evaluation |
| | Poor infrastructures for implementing innovative assessments | • High ratio of students to professor in the department • Insufficient time for practice or innovative assessments • Limited number of sessions compared to number of students • Lack of evaluation briefings in the department • Lack of pre-determined program • Lack of sufficient independence for department supervisors • Insufficient time |
| | Poor system of feedback for faculty members’ performance evaluation | • Lack of feedback from the university to active faculty members • Indifferent reaction of university towards faculty members • Having an outlook of breaking traditions if faculty members perform differently |
| | Poor physical facilities | • Lack of space and facilities • Low number of patients due to long admission process for patients • Lack of simulation-patients due to high costs |

Participant Number 11 stated that: “The DOPs method is used for the evaluation of practical skills in the prosthesis unit to a certain extent; however, it is done in a traditional manner. In a traditional manner, the standard checklists have not been designed and some of the professors implement their own creativity in evaluating some skills using DOPs.”

Participant Number 9 stated that: “In the removable prosthesis unit, an evaluation of skills is conducted by the professor during different phases of the task and the student is given feedback. Moreover, the student’s final grade is calculated with reference to the logbook at the end of the term and the Mini-CEX method is actually being routinely carried out, and of course, it requires greater organization”.

Six categories were identified in the realm of “obstacles in implementing new evaluation methods”: “the essence of clinical skills evaluation”, “faculty members’ lack of awareness regarding the implementation of new evaluation methods”, “faculty members’ resistance to making use of new evaluation methods”, “poor supervision of clinical evaluation”, “inadequate infrastructures for implementing new assessments”, and “inadequate system of feedback for faculty members’ performance evaluation” (Table 3).

Viewpoints of educational crew in the category “essence of clinical skills evaluation”

Participant number 10 stated that: “Each skill has numerous stages and designing a checklist based on each skill is very time-consuming. On the other hand, there are many skills and such an assessment cannot be designed for all skills.”

Participant number 8 stated that: “To evaluate some of the skills, the presence of the patient or a simulated patient is necessary, and finding a patient with the scenario in mind can be quite difficult. Moreover, if we want to use a simulated patient, training requires great costs and we are often not given adequate financial support to do so.”

Viewpoints of the educational crew in the category “faculty members’ lack of awareness regarding the implementation of new evaluation methods”

Participant number 9 stated that: “Less research is conducted in the field of educational issues… and our knowledge regarding the experiences of different fields and other universities regarding the design of these assessments is not sufficient.”

Participant number 2 stated that: “Faculty members are not acquainted with all evaluation methods, in fact, they follow in the footsteps of their own professors and have no tendency to enhance their methods or even use other methods.”

Viewpoints of the educational crew in the category “faculty members’ resistance towards making use of new evaluation methods”

Participant number 12 stated that: “Faculty members are resistant towards new methods of evaluation, and faculty members that use innovative methods are considered to be breaking the tradition and are prohibited from promoting these methods.”

Participant number 3 stated that: “With the integration of treatment and education, the faculty member’s workload is very high and they spend less time on education and evaluation.”

Viewpoints of the educational crew in the category “weak supervision of clinical evaluation.”

Participant number 3 stated that: “Educational supervisors have fallen short in their supervision, and when faculty members notice that they do not have to be accountable for their performance, each will perform subjectively.”

Participant number 12 stated that: “Until now, the university has not developed any regulations for how to conduct skills evaluation and to create a unified procedure of evaluation. Therefore, the subjective performance of the faculty members is quite natural.”

Viewpoints of the educational crew in the category “Inadequate infrastructure for implementing innovative evaluation.”

Participant number 10 stated that: “There is a great number of students and the number of sessions for each section is limited… evaluating this number of students using DOPs during this limited duration of time is not possible.”

Viewpoints of the educational crew in the category “inadequate system of feedback for faculty members’ performance evaluation”

Participant number 8 stated that: “The University’s response towards all faculty members is somewhat the same and the system for providing incentives is not very efficient. Is it too much to ask for at least a written letter of encouragement for adding creativity to the program?”

Viewpoints of the educational crew in the category “Poor physical facilities.”

Participant number 8 stated that: “The test hall is very small and does not have adequate facilities to conduct clinical assessments. We are sometimes faced with a lack of supplies to carry out certain treatment procedures.”

In the realm of “Necessity for implementing innovative evaluation methods”, five categories were obtained: “providing physical facilities”, “making use of efficient workforce”, “planning and organizing for implementation of innovative evaluation methods”, “structural changes for implementing innovative evaluation methods”, and “preparing infrastructures to implement innovative evaluation methods” (Table 4).

| Category | Sub-Category | Open Code |
|---|---|---|
| Necessity for effective evaluation of clinical skills | Providing physical facilities | • Providing necessary facilities such as a standard test hall for clinical assessment • Providing fundamental facilities to conduct clinical duties |
| | Making use of an efficient workforce | • Recruiting faculty members with adequate capabilities • Standardizing the number of faculty members |
| | Planning and organizing to implement innovative evaluation methods | • Acquainting faculty members with evaluation methods • Holding one-hour courses on a continuous basis, instead of daily courses • Determining necessary learning skills • Sufficient time for evaluating each skill • Allocating more time for the examiner |
| | Structural changes for implementing innovative evaluation methods | • Scoring based on behavior and encounter with the patient, maintaining health and safety of the patient • Standardizing the number of students • Expanding the evaluation center in order to coordinate evaluation activities • Determining and implementing policies of the evaluation center • Distribution of patients among students at the beginning of the term |
| | Preparing infrastructures for implementing innovative evaluation methods | • Designing a unified checklist in order to standardize clinical evaluations • Developing a unified guideline for implementing clinical skills evaluation • Developing common criteria for scoring • Using a checklist and logbook to record clinical evaluation immediately after each training session • Better coordination among faculty members teaching each practical course • Obligation of faculty members to qualitatively and quantitatively use practical training sessions throughout the term |

Viewpoints of the educational crew in the category “providing physical facilities”

Participant number 10 stated that: “… required facilities are not provided to work efficiently; for example, infection control requires more facilities such as turbines or an individual handpiece for each patient.”

Viewpoints of the educational crew in the category “making use of an efficient workforce”

Participant number 4 stated that: “Faculty member recruitment necessitates filtering so that faculty members with high capabilities are recruited, preferably graduates of Type 1 universities.”

Viewpoints of the educational crew in the category “planning and organizing to implement innovative evaluation methods”

Participant number 5 stated that: “Empowerment courses are a necessity for faculty members, preferably during a short duration of time so that we can participate in workshops in between our duties, while still being continuous.”

Participant number 1 stated that: “Evaluation method workshops should be held so that faculty members can become familiar with how to design checklists and scenarios, and how to implement and score them. Some faculty members are not familiar with designing scenarios and think that placing a cliché in one station amounts to conducting a performance assessment, when it is actually similar to a multiple-choice test, only conducted station to station.”

Viewpoints of the educational crew in the category “structural changes to implement innovative evaluation methods”

Participant number 6 stated that: “The grade must be based on the type of behavior and encounter with the patient, maintaining the health and safety of the patient, and proficiency in educational duties. At present, it is done more subjectively.”

Participant number 13 stated that: “The number of students should be limited so that all skills can be performed and accurately evaluated for each student during the time given. However, this decision is made by the Ministry of Health, and the university can declare its opinion to the Ministry.”

Viewpoints of educational crew in the category “preparing infrastructures to implement innovative evaluation methods”

Participant number 3 stated that: “To conduct a standard and fair evaluation among students, the evaluation should be done based on a specified checklist because students take courses with different professors.”

Participant number 7 stated that: “The department requires a unified guideline for the above objective and this has not been done thus far.”

4. DISCUSSION

This study aimed to evaluate the obstacles and requirements for implementing performance assessments in the evaluation of General Dentistry students based on the lived experiences of faculty members at Tabriz University of Medical Sciences. According to the analyses of interviews related to current conditions, two categories were obtained: “acceptance phase indicating the necessity to create changes” and “the beginning of the transition phase from traditional methods of clinical skills evaluation to innovative evaluation methods”. In fact, the deficiencies in clinical skills evaluation and shortcomings in acquiring the required skills have been acknowledged by faculty members and students. The results of some studies also indicate students’ lack of adequate skills and the inadequate evaluation of trainees. For example, in the study by Sodagar et al. in the field of dentistry, trainees had not obtained the necessary competencies in some basic skills, such as presenting the treatment plan and controlling children’s behavior, which indicates poor training and evaluation of their basic skills [28].

In studies conducted on the quality of clinical skills evaluation of trainees, dentistry students have also emphasized the necessity for expanding procedural skill assessments to enhance the evaluation/training aspect [29].

In the study by Singh, using these assessments was considered effective in increasing performance skills, and implementing such assessments was required from the first clinical encounters [29]. Making use of innovative clinical assessments such as Mini-CEX and DOPs is somewhat reassuring for faculty members in ascertaining that their trainees have acquired the required skills [21]. Therefore, this study indicated the acceptance of changes in evaluation methods by both students and faculty members and the preparedness to accept new methods of evaluation. Meanwhile, some studies carried out in Iran suggest the beginning of a movement from subjective evaluations by faculty members to standard performance assessments. In the study conducted by Kouhpayehzadeh et al. in three universities (Tehran, Iran, and Shahid Beheshti Universities of Medical Sciences), OSCE, logbook, portfolio, and 360-degree evaluation were the methods used for evaluating performance skills after multiple-choice assessments [30]. In various other studies conducted in developed countries, making use of performance assessment methods is considered beneficial [17]. In addition, in the study by Lohe et al., a meaningful correlation was observed in trainees’ scores from the first encounter to the fourth encounter using the Mini-CEX assessment, and trainees’ scores increased after four encounters [31]. Students considered this method valuable for evaluating clinical competencies at the level of General Dentistry and for preparing them for specialization [31].

Considering the obstacles in using innovative evaluation methods, six categories were obtained: “the complex and time-consuming essence of clinical skills evaluation”, “faculty members’ unawareness regarding the implementation of innovative evaluation methods”, “resistance of faculty members in using innovative evaluation methods”, “poor supervision of clinical assessments”, “poor infrastructures for implementing innovative assessments”, and “poor feedback system for faculty members’ performance evaluation”. Most faculty members acknowledged that innovative evaluation methods are inherently time-consuming and difficult to design, and this feature leads them to refrain from designing and using such assessments [22, 32]. In the study by Faryab and Sinai, the most important issue related to the structure of performance assessments was having an experienced team of examiners who are familiar with these methods and can design checklists proficiently, without disagreements among examiners. The stressful nature of these assessments is another feature: because they are conducted under the supervision of the professor, they result in high stress and anxiety for the student [33]. Therefore, most professors suggest that these assessments be used as procedural assessments so that they can have a more educational role and provide feedback to resolve weaknesses [15]. Likewise, in various studies, students consider the advantage of these assessments to be the feedback and the improvement of their weaknesses [16, 34].

Another obstacle to the use of innovative evaluation methods is the lack of clinical supervision. In the current educational system, the evaluation of faculty members is largely quantitative and is mostly based on evaluation forms designed according to the evaluation system; their performance is not evaluated in the field. The promotion system is mostly research-based and depends on research performance and the number of publications, while teaching and training carry the lowest score in the promotion process. Thus, faculty members spend most of their time and energy on research [35]. As a result, to change professors’ viewpoints, supervision should be enhanced so that they become interested in using these assessments. Empowering faculty members and training expert examiners is the next step that the university may take to facilitate the use of innovative evaluation methods. However, empowerment courses should be provided based on needs analysis: faculty members who had taken empowerment courses still felt that their real needs regarding the design and implementation of assessments had not been met. Therefore, faculty empowerment programs on the design and implementation of assessments should be tailored to needs in the field of dentistry.

5. STUDY LIMITATIONS

In this study, only two departments, orthodontics and prosthodontics, were selected. This study can be complemented by interviewing a larger group of participants from all of the education departments. Another limitation of this study was the busy schedules of the clinical professors; the sessions were rescheduled frequently so that the clinical professors could attend. The opinions of students and recent graduates could also complement this study. In addition, it would have been better if the questionnaire used in this study had included the object-oriented recommendations of the participants.

CONCLUSION

Changes in evaluation methods are necessary for responding to the community’s needs. The medical community should be directed towards a competency-based curriculum, especially in procedure-based fields such as dentistry. Assurance of acquired skills is a concern for faculty members and, at other levels, for the university and the public. Using performance-based assessments instead of multiple-choice assessments can be a step forward in training skillful students with the professional competencies necessary for providing services to the community. It is evident that there are many cultural problems with applying western models in developing countries. Thus, it is suggested that these models be adapted to be compatible with the local culture of the country.

LIST OF ABBREVIATIONS

| OSCE | = Objective Structured Clinical Examination |
| DOPs | = Direct Observation of Procedural Skills |
| Mini-CEX | = Mini Clinical Evaluation Exercise |
| FGD | = Focus Group Discussion |

AUTHORS’ CONTRIBUTIONS

RN, SF, and NB designed the research and drafted the manuscript. NB was responsible for coordinating the study. RN and SF interviewed the participants. RN, SF, and NB commented on the coding process. All authors have read and approved the final version of the manuscript.

ETHICS APPROVAL AND CONSENT TO PARTICIPATE

This study was approved by the Research Ethics Committee of Tabriz University of Medical Sciences (IR.tbzmed.reec.1397.253).

HUMAN AND ANIMAL RIGHTS

No animals were used in the studies that are the basis of this research. All procedures involving human participants were in accordance with the Research Ethics Committee of Tabriz University of Medical Sciences and with the Helsinki Declaration of 1975.

CONSENT FOR PUBLICATION

Before the interview began, an information sheet was provided to the participants to explain the purpose of the study, state who would receive the results, and assure them of the anonymity of information and their freedom to participate or withdraw; their informed consent was then secured. Each interview was recorded with a digital sound recorder after obtaining the participants’ permission, and important notes were taken.

STANDARD OF REPORTING

The COREQ guidelines were followed.

AVAILABILITY OF DATA AND MATERIALS

Data are available from the authors upon reasonable request and with the agreement of the Medical Education Research Center of Tabriz University of Medical Sciences.

FUNDING

This research was supported and funded by the Medical Education Research Center of Tabriz University of Medical Sciences, Tabriz, Iran.

CONFLICT OF INTEREST

The authors declare no conflicts of interest, financial or otherwise.

ACKNOWLEDGEMENTS

The authors would like to thank Tabriz University of Medical Sciences for supporting and funding this research as part of an MS thesis in medical education.