
Artificial Intelligence and its Implications in the Management of Orofacial Diseases - A Systematic Review

Abstract

Objective

This study aimed to evaluate artificial intelligence’s integration into dental practice and its impact on clinical outcomes.

Material and Methods

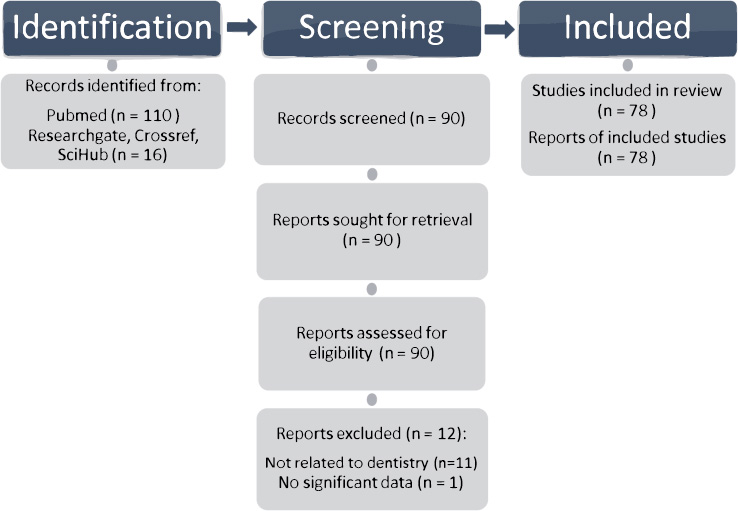

Research papers titled “Artificial Intelligence and its Implications in Dentistry” were searched on PubMed, ResearchGate, Sci-Hub, and Crossref from 2018 onwards. The selected publications were independently evaluated, reviewed for eligibility, and meticulously analyzed to meet all inclusion criteria.

Results

This review of 46 studies (2018-2024) explored AI applications in dentistry, particularly machine learning and deep learning. AI was applied for diagnosis in 19 studies, treatment planning in 3 studies, and both diagnosis and treatment in 18 studies. It was used for detection in 15 studies, segmentation in 7 studies, and classification in 4 studies. The largest sample, 7,245 patients, came from a study focused on oral cancer detection. The studies used diverse imaging modalities, underscoring AI's broad applicability in the field.

Conclusion

Artificial intelligence in dentistry holds significant promise, particularly in diagnosis. The large patient samples and diverse imaging techniques in the included studies support AI's potential to enhance diagnostic accuracy and treatment efficiency. As AI continues to evolve, its integration into dental practice is likely to become increasingly essential. It should complement rather than replace human clinical expertise, and more research is needed before it can be widely used in clinical settings.

1. INTRODUCTION

Artificial intelligence (AI) aims to enable machines to mimic intelligent human behavior, a concept that traces back to Aristotle and was further developed by Alan Turing. In August 1955, John McCarthy began advocating the term “AI”, which was formalized during workshops in 1956, establishing AI as an academic field [1].

AI is rapidly evolving, focusing more on future applications. For example, Okayama University developed “Zerobot”, a remote-controlled robot for needle insertion in computed tomography (CT)-guided interventional radiology, minimizing doctors' radiation exposure [2].

AI includes subcategories, like machine learning (ML), deep learning (DL), and robotics. ML enables automated learning without explicit programming, using current observations to forecast future occurrences [3]. DL is a recent advancement in AI systems in dentistry, using multiple neural network layers to analyze input data and make predictions from unstructured and unlabeled data [4].

AI's impact spans multiple fields, including medicine and engineering. In dentistry, AI enhances efficiency and accuracy, as well as manages routine tasks, like appointment scheduling. It can also identify malocclusions, detect maxillofacial abnormalities, and classify dental restorations. Radiography is the most popular diagnostic use [5]. In endodontics, AI accurately detects periapical pathologies and predicts disease outcomes [6].

Over the past 20 years, AI applications in dentistry have grown. One of the earliest was ML, aiming to build systems that learn and function without explicit human direction. Artificial neural networks (ANNs) emerged simultaneously, mimicking the human brain's neural network to help computers react appropriately to events [4, 7].

The objective of this review was to systematically evaluate and synthesize the current evidence on the applications, effectiveness, and implications of AI in the diagnosis, treatment, and management of orofacial diseases, with a focus on identifying its potential benefits, limitations, and areas for future research.

1.1. Application of AI in Disease Management

AI is widely applied in disease management to analyze treatment outcomes and enable precision medicine. ML algorithms are robust analytical tools that help medical professionals evaluate patterns and trends, thereby reducing errors and improving diagnostic accuracy. In dentistry, DL applications are particularly promising: by identifying patterns in vast image datasets, they create high-performance systems with superior decision-making.

AI's self-driven applications have gained interest in the pharmaceutical and medical industries, with advancements in medical image interpretation. Wearable technology can also provide early and effective therapy by anticipating life-threatening emergencies, like strokes [8].

According to the Canadian Dental Association, there are 9 acknowledged specialties in the field of dentistry, including dental public health, endodontics, oral and maxillofacial surgery, oral medicine and pathology, orthodontics and dentofacial orthopedics, pediatric dentistry, oral and maxillofacial radiology, periodontics, and prosthodontics [8]. This article further explains the role of AI in each of these subspecialties in detail.

1.2. Endodontics

1.2.1. Periapical Lesions

Diagnosing and treating teeth with periapical lesions can be challenging. Apical periodontitis, which accounts for about 75% of radiolucent jaw lesions, requires early detection to prevent disease spread and improve treatment outcomes [9]. While panoramic and intraoral periapical radiographs (IOPA) are commonly used, their reliability is limited due to the reduction of 3D anatomy into a 2D image. Cone-beam computed tomography (CBCT) imaging, a 3D technique, more accurately identifies periapical lesions' location and size, with a meta-analysis reporting accuracy values of 0.96 for CBCT, 0.73 for traditional periapical radiography, and 0.72 for digital periapical radiography. However, CBCT is less accurate for diagnosing apical periodontitis in teeth with root fillings [9].

Ruben et al. highlighted a DL model with superior diagnostic accuracy, with 0.87 sensitivity, 0.98 specificity, and 0.93 area under the curve (AUC), outperforming experienced oral radiologists [10]. Vo et al. and Maria et al. also introduced AI models with remarkable diagnostic performance, though limited by dataset size [11, 12]. These advancements highlight AI's potential to enhance diagnostic precision and efficiency in endodontics, pending further development and validation.
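The performance figures quoted above (sensitivity, specificity, and AUC) can be computed from a model's predictions as in the following minimal sketch. The function names and the counts in the usage note are hypothetical illustrations, not data or code from the cited studies; AUC is computed here with the rank-based (Mann-Whitney) definition, which is one common way to obtain it.

```python
def diagnostic_metrics(tp, fp, tn, fn):
    """Return sensitivity, specificity, and accuracy from confusion-matrix counts."""
    return {
        "sensitivity": tp / (tp + fn),  # share of diseased cases correctly flagged
        "specificity": tn / (tn + fp),  # share of healthy cases correctly cleared
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
    }


def auc_from_scores(labels, scores):
    """Area under the ROC curve via the Mann-Whitney statistic.

    AUC equals the probability that a randomly chosen positive case receives
    a higher score than a randomly chosen negative case (ties count as 0.5).
    """
    pos = [s for label, s in zip(labels, scores) if label == 1]
    neg = [s for label, s in zip(labels, scores) if label == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative case")
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in pos
        for n in neg
    )
    return wins / (len(pos) * len(neg))
```

For example, with made-up counts of 87 true positives, 13 false negatives, 98 true negatives, and 2 false positives, `diagnostic_metrics(87, 2, 98, 13)` gives a sensitivity of 0.87 and a specificity of 0.98.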

1.2.2. Working Length Determination

Choosing the correct working length is crucial for successful root canal treatment, reducing insufficient cleaning and shaping, and maintaining root-filling material in the canal. Xiaoyue et al. reported an AI model for measuring root canal length with exceptional accuracy, surpassing the dual frequency impedance ratio method, which had 85% accuracy. Future research can improve performance by increasing the sample size [13].

1.2.3. Vertical Root Fractures

Vertical root fractures (VRF) can occur in treated or untreated teeth and are often diagnosed late due to their subtle nature. AI models can help clinicians diagnose VRF more quickly and accurately [14]. Ziyang et al. found the ResNet50 AI model to have the best sensitivity and accuracy for diagnosing VRF [15]. Hina et al. reported an AI model detecting tooth cracks with a mean ROC of 0.97 [16].

1.2.4. Morphology of Root and Root Canal System

Evaluating the shape of tooth roots and canals is critical in endodontics, especially for complex variations, like C-shaped canals. Adithya et al. reported superior performance of Xception U-Net and residual U-Net AI technologies in categorizing C-shaped canal anatomy in mandibular second molars, though limited by small sample size and exclusive focus on C-shaped anatomy [17].

1.2.5. Prognosis of Root Canal Treatment

Chantal et al. developed an AI model to predict variables linked to root canal treatment failure, showing good performance in forecasting tooth-level factors. Yunxiang et al. found a model with 91.39% sensitivity, 95.63% AUC, and 57.96-90.20% accuracy. These automated models assist clinicians with decision-making, providing quick, accurate results without extensive clinical experience and reducing inter-observer variability [18, 19].

1.3. Orthodontics

Diagnosis in orthodontics involves cephalometric examination, facial and dental evaluation, skeletal development assessment, and inspection of upper respiratory congestion. DL and convolutional neural networks (CNNs), as used in tools such as Web Ceph, are effective for 3D cephalometric landmark identification. Sameh et al. applied the You Only Look Once (YOLO) technique to intraoral images to detect malocclusions, such as overjet, overbite, crossbite, crowding, and spacing, achieving 99.99% accuracy. Advances in computerized technology have also led to the development of 3D intraoral scanners for dental practice [20].

AI also accurately detects skeletal age using cervical vertebral maturation and wrist X-rays, with CNN models achieving over 90% precision. The Dental Monitoring (DM) system, comprising a mobile app, web doctor, and detection algorithms, aims to reduce chair time, enable early orthodontic issue detection, and improve aligner fit [21].

1.4. Imaging

AI is considered one of the fastest-expanding tools in dentistry with regard to imaging and diagnosis. AI and DL could benefit medical radiography by automating data mining across the vast volume of digital radiographs generated in clinical settings. Several studies have demonstrated the efficiency of AI in oral and maxillofacial radiology, where numerous algorithms have been developed to localize cephalometric landmarks, detect periapical disease and diseases of the periodontium, segment and classify cysts and tumors, and diagnose osteoporosis [22]. The accuracy and performance of these systems, however, have not been consistent and depend on the type of algorithm used.

1.4.1. Identifying Dental Anatomy and Detecting Caries

CNN identification software shows potential in detecting anatomical structures. Periapical radiographs are typically used as training data for locating and labeling teeth; CNNs analyzing them achieve an accuracy of 95.8-99.45%, which is comparable to the 99.98% accuracy of clinical professionals in detecting and classifying teeth [23]. A deep CNN algorithm detected carious lesions in 3000 periapical radiographs of posterior teeth with a sensitivity of 74.5-97.1% and an accuracy of 75.5-93.3%. This is a substantial advancement over medical professionals using radiographs alone for diagnosis, whose sensitivity ranges from 19 to 94% [24].

1.4.2. CBCT

With wide applications in dentistry, CBCT imaging is considered one of the most crucial diagnostic tools in many instances for differentiating teeth and their surrounding structures. A study conducted by Kuofeng et al. (2019) at the University of Hong Kong, China, concluded that the number of 3D imaging modalities grew ninefold between 1995 and 2019 [25]. It is therefore important to understand the reasons behind this growth and demand.

The 3D component of CBCT helps medical professionals precisely identify diseased regions, specifically in the buccolingual aspect, which other radiological modalities, like panoramic X-rays, cannot provide. However, assessing each landmark and measuring parameters is time-consuming. Automation can resolve these issues. DL enables the entire process, from input images to final classification, without the need for human intervention. This is achieved by advanced software capable of handling vast data volumes and performing complex tasks, like predictive modeling, natural language processing, and image recognition.

Image recognition and analysis of specific diseases usually follow 3 steps: pre-processing, segmentation, and post-processing. CNNs are currently the most frequently employed neural networks for segmenting and analyzing medical images. They comprise three types of layers: input, output, and hidden layers, where the hidden layers are made up of several convolutional, pooling, and fully connected layers [26].

Hierarchical features are extracted and learned via convolutional filters, while the pooling layer reduces neighboring pixels to the average of the features that have been obtained [27]. One of the most significant CNN frameworks is U-Net, which is also employed in image segmentation. Such automation can help reduce several disparities: the interpretations of the same images by new and experienced clinicians can be more closely matched, and the gap in imaging diagnosis between wealthy and impoverished communities can be narrowed.
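The two hidden-layer operations described above, convolutional filtering and average pooling, can be illustrated with a minimal pure-Python sketch. The function names, the toy 5x5 image, and the hand-picked edge filter are assumptions made for illustration; in a real CNN the filter weights are learned from data, and frameworks implement these operations far more efficiently.

```python
def conv2d_valid(image, kernel):
    """Slide a filter over a 2D image (no padding, stride 1) and return the feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def avg_pool2d(feature_map, size=2):
    """Replace each non-overlapping size x size block with the average of its values."""
    out_h = len(feature_map) // size
    out_w = len(feature_map[0]) // size
    return [
        [
            sum(feature_map[i * size + a][j * size + b]
                for a in range(size) for b in range(size)) / (size * size)
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# Toy image: dark on the left, bright on the right (a vertical edge).
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hand-picked filter that responds to left-to-right intensity increases.
edge_filter = [[-1, 1], [-1, 1]]

features = conv2d_valid(image, edge_filter)  # strong response only at the edge
pooled = avg_pool2d(features, size=2)        # smaller map summarizing each block
```

The feature map is non-zero only in the column where the intensity changes, and pooling halves its resolution while keeping that response, which is the sense in which stacked layers build up hierarchical features.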

1.4.2.1. Inferior Alveolar Nerve

Inferior alveolar nerve (IAN) injury is a common adverse effect of implant surgery, molar extraction, and orthognathic surgery. The prognostic value of CBCT is higher than that of panoramic X-rays prior to surgery. Therefore, identifying and segmenting the IAN on CBCT images before surgery is crucial, and this is done primarily using CNNs. The studies conducted by Pierre et al. and Mu-Qing et al. both found CNNs to be highly accurate at detecting the IAN itself as well as its relationship to the third molar [28, 29]. Although automating this process saves time, the accuracy remains only acceptable and requires improvement.

1.4.2.2. Tooth Segmentation and Endodontics

Many studies on the use of DL in dentistry have been centered on tooth segmentation, which can be classified into partial segmentation and global segmentation. Specifically, global segmentation approaches based on DL and CBCT can yield more detailed dental information than contemporary oral scans that simply display the position and axis of the crown without revealing the position of the root. Conversely, partial segmentation methods are used to help diagnose conditions related to the teeth, including pulpitis, periapical disease, and root fractures.

A method for automatically segmenting teeth based on CBCT imaging was published by Kang et al. They started by converting 3D images into 2D images, and then recording 2D regions of interest (ROIs). Ultimately, ROIs were used to segment the 3D teeth with 93.35% accuracy. Moreover, the detection of periapical pathosis on CBCT images was investigated using the CNN approach, achieving an accuracy of 92.8%. The data showed no difference between DL and manual segmentation [30]. Additionally, U-Net was able to identify unobturated MB2 canals on endodontically obturated maxillary molars and C-shaped root canals of the second molar [31].

1.4.2.3. TMJ and Sinus Disease

CBCT images can be segmented using U-Net to show the mandibular ramus and condyles. A web-based approach based on a neural network and shape variation analyzer can be used to classify temporomandibular joint osteoarthritis. Apart from osteoarthritis and condyle morphology, CBCT can demonstrate the joint space, effusion, and mandibular fossa, all of which can support a diagnosis of temporomandibular joint dysfunction syndrome [32]. Sinusitis has also been diagnosed using CNNs. Similar studies have been conducted by other investigators, who have segmented the sinus lesion, air, and bone using 3D U-Net. Still, there is room for improvement in the sinus lesion algorithm [33].

1.4.2.4. Dental Implant

Together, CBCT and DL can help with evaluation prior to surgery for issues related to the area of tooth loss, such as height, thickness, IAN placement, and alveolar bone density. They also provide postoperative stability analysis, which is convenient for examination later.

CNNs can be utilized to assist in the planning of the implant's initial location. A new end-to-end technology for CBCT image analysis only requires 0.001 seconds to perform. A multi-task CNN technique that can classify implant stability, extract zones of interest, and segment implants was presented by Liping et al. It evaluated each implant in 3.76 seconds and had an accuracy of above 92% [34].

1.4.2.5. Landmark Localization

In surgical navigation systems, accurate craniomaxillofacial (CMF) landmark localization is essential for surgical precision. Traumatic defects and abnormalities pose challenges for DL in this field. Despite these challenges, DL has been reported to perform well in CMF landmark localization and to enable precise surgical planning. Neslisah et al. presented a three-step DL technique to segment anatomy and automate landmarks, producing excellent outcomes [35].

Orthodontic analysis is another application for this technique. Jonghun et al. were able to identify 23 landmarks and compute 13 parameters using a mask region-based convolutional neural network (R-CNN), which completed tasks equivalent to manual analysis in 30 seconds, whereas manual analysis took 30 minutes [36].

1.4.3. Cyst and Tumor Classification

A computer-aided classification system for cysts and tumors based on image textures on panoramic radiographs and CBCT has been developed. Multiple DL techniques, particularly CNN-based techniques, have been developed to identify and categorize lesions on panoramic radiographs and CBCT into tumors and cyst lesions. Using panoramic radiographs, Kwon et al. and Hyunwoo et al. detected and classified ameloblastoma and other cysts using the YOLO network, a deep CNN model for detection tasks [37]. The performance of the studied trials, including ML and DL models, revealed variability despite encouraging results. These findings were understandable as cystic and tumor lesions can exhibit similar radiographic signs and manifest in various manners, like shape, location, and internal structure differences. For use in clinical settings, AI models that identify and categorize tumor and cyst lesions must be further developed.

1.5. Periodontal Bone Loss

CNNs have been applied to bone loss detection and periodontal condition categorization. A hybrid DL model was recently built by Hyuk-Joon et al. to diagnose periodontal disease and stage it in accordance with the 2017 World Workshop on the Classification of Periodontal and Peri-Implant Diseases and Conditions [38]. These studies have revealed promising results, with AI models delivering findings on par with or even better than manual periodontal bone loss analysis.

1.6. Restorative Dentistry

AI's ability to detect caries is one of its most impressive applications in restorative dentistry. For instance, V. et al. demonstrated that ANNs could distinguish between dental caries and normal tooth anatomy using patient oral images, achieving 97.1% accuracy in identifying interproximal caries. However, no studies have shown AI's capability to predict caries severity [39].

Ragda et al. found that AI models could identify and differentiate between various dental restorations based on gray values on dental radiographs. Additionally, AI's use of sophisticated algorithms, like neural networks and decision tree models, has significantly improved the accuracy of dental shade matching [3, 40]. According to a review by Sthithika et al. [41], a decision tree regression AI model for specific dental ceramics achieved 99.7% accuracy. The type of technology and the lighting conditions affect AI's shade-matching accuracy and, in turn, the aesthetic results of dental restorations.

ANN has also been used to determine the shade, light-curing unit, and composite Vickers hardness ratio of bottom-to-top composites in an in vitro study [42] and assist in predicting the debonding probability of composite restorations [43]. A study created a CNN AI model to identify the crown tooth preparation finishing line, achieving an accuracy of 90.6% to 97.4% [44]. AI also helps predict post-operative sensitivity and dental restoration failure. A controversial study trained AI to predict post-operative sensitivity using data from 213 dentist questionnaires [45]. Despite promising results, the reliability of AI outcomes is influenced by the quality of the training data.

1.7. Periodontics

AI has advanced considerably in the field of periodontology in recent years. Studies indicate that AI systems are useful for assessing periodontal health and making medical diagnoses. For example, Nguyen et al. found that DL technology detects periodontally damaged teeth on periapical radiographs more reliably than ANN [46-48].

A study conducted by Gregory et al. [49] demonstrated that ML models could be trained to correlate data obtained from dental radiographs, clinical examinations, and patient medical histories in order to automatically diagnose the disease in the future. The capacity of this system to gauge the severity of periodontal disease is one of the most recent developments in AI in periodontology. ANN was shown to be able to differentiate between aggressive and chronic periodontitis in patients by evaluating immunologic parameters, with accuracy ranging from 90 to 98%, having statistical significance [50].

According to a recent systematic review, AI applications can identify dental plaque with an accuracy of 73.6% to 99%. However, based on IOPAs, the accuracy of this technology in detecting periodontitis is reported to be between 74% and 78.20%. AI technology is also applied to optimize dental implant design: AI models adjust the porosity, length, and diameter of the dental implant, reducing stress at the implant-bone interface [51]. In addition, several studies conducted by Henggou et al., Seung-Ryong et al., and Chia-Hui et al. have reported that models can forecast the success and osseointegration of dental implants [52-54].

1.8. Prosthodontics

1.8.1. CAD/CAM

Computer-aided design and computer-aided manufacturing (CAD/CAM) simplify the digital imaging of prepared teeth and the milling of restorations from ceramic blocks. These systems have reduced the possibility of human error in the finished prosthesis while replacing the laborious and lengthy traditional casting procedure [55].

1.8.2. Fixed and Removable Prosthetics Supported by Teeth

For fixed prostheses, these technologies can be used to analyze and suggest different treatment alternatives after the original tooth anatomy has been scanned. When designing a removable partial denture (RPD), a computing device should first collect data on the intraoral situation, including the sites of the missing teeth, the prosthetic state of the existing teeth, and occlusion [55].

One study presented ontology-driven, case-based software for automated RPD design, with 96% success for patients using RPDs. Another study adapted the model into a 2-dimensional format for fully computerized design, achieving 100% accuracy for the mandible and 75% for the maxilla [56]. AiDental software automates customized RPD generation, enhancing preclinical learning and student education [57].

1.8.3. Maxillofacial Prosthesis

Before the development of CAD/CAM, wax was used for carving to regenerate the facial form. The first step is to take X-ray, MRI, or CT scans, and then utilize computer software. The information is then transported to a rapid prototyping (RP) model. However, RP models cannot accurately follow skin curvature, so the wax cast is adapted and the final steps must be carved. CAD/CAM technology showed better results in the replacement of lost structures than traditional methods [58].

1.9. Oral Surgery

1.9.1. Tooth Impaction

Tooth impaction is a common pathological dental condition caused by local and systemic etiological factors, such as genetics. Tooth impaction affects 0.8% to 3.6% of the world population, and the prevalence of impacted third molars ranges from 16.7% to 68.6% [59, 60]. Complications, such as pericoronitis, periodontitis, orofacial discomfort, TMJ issues, fractures, cysts, and neoplasms, can arise from untreated tooth impaction. In most cases, impacted teeth need to be extracted, but not before other anatomical structures and root morphology are assessed [59, 61-63].

ANNs have proven valuable in dental surgery, and AI technology has the potential to significantly transform orthognathic surgery. In a study by Raphael et al., ANN models improved the accuracy of the predicted surgical outcomes that oral surgeons took into consideration. Doctors assessed 30 pre-treatment facial images of patients undergoing orthognathic surgery and generated anticipated post-surgery facial images. The projected post-surgery outcomes were then adjusted using trained ANN models. When the predicted facial images before and after AI modification were compared with the actual post-surgery facial images, AI intervention was found to increase the accuracy by more than 80%. Consequently, the use of AI in orthognathic surgery can have a significant impact on treatment planning and decision-making [64].

Paresthesia caused by nerve injury is a common side effect of third molar extraction. Locating the nerve on panoramic radiographs beforehand can help prevent it. DL algorithms currently use these images to forecast the risk of IAN injury. Based on the nerve's proximity to the mandibular third molar, they can precisely locate the nerve and forecast the possibility of nerve damage. According to reports, AI technologies can anticipate the likelihood of nerve injury after tooth extractions with 82% accuracy [65].

AI has helped to increase the success rate of treatment by accurately locating oral lesions on panoramic radiographs prior to surgery. In one study, AI exhibited 90.36% accuracy in identifying odontogenic keratocysts and ameloblastoma on dental images [66, 67].

1.9.2. Implantology

Dental implant therapies are improved when intraoral scanners and CBCT images are combined. With AI integration in implantology, several limitations on fixed and removable prostheses could be lifted, such as occlusal or interproximal adjustment errors, cementation mistakes, and positional faults. An AI model was proposed by Shriya et al. to lessen these inaccuracies, where AI was employed to help identify subgingival margins of abutments for implants using monolithic zirconia crowns. This technique reduced mistakes and time spent [55, 68].

1.10. Oral Medicine

AI has significantly advanced the detection and diagnosis of oral cancer. ANNs have shown 98.3% accuracy and 100% specificity using laser-induced autofluorescence, which is a non-invasive technology that distinguishes normal from premalignant tissue. CNNs have also excelled in recognizing cancerous and precancerous lesions through autofluorescence images. CNNs, used for hyperspectral image analysis, demonstrated the potential for unsupervised image-based classification and diagnosis of oral cancer. Deep neural networks (DNNs) predicted the development of oral cancer from potentially malignant lesions with 96% accuracy, surpassing other computer systems [69].

The gold standard for identifying oral cancer lesions remains histopathologic analysis, where AI has enhanced image efficacy and reduced errors. CNNs efficiently identified cancer stages by recognizing the keratinization layer. Additionally, segmentation methods detecting keratin pearls in patients were proposed [70]. AI also improved radiological interpretations, aiding clinicians in achieving accurate and effective diagnoses [71].

1.11. Pediatric Dentistry

1.11.1. Augmented Reality and Virtual Reality

Virtual reality (VR) is a computer-generated 3D simulation that enhances interaction with electronic devices and the real environment. In dentistry, interest in VR has increased due to the demand for clinical practice and motor skills training, as it enables trainees to practice and learn without the risk of endangering patients. This is particularly beneficial in the care of pediatric patients, who are often more hyperactive and require clinicians to be vigilant against sudden movements. Furthermore, VR aids pediatric care by providing distraction through games and entertainment applications, diminishing anxiety and pain perception, which improves the experience and encourages children to attend dental visits regularly. Augmented reality (AR) also enables parents to see intra-oral remarks made by dentists, improving patient education and compliance with children’s oral hygiene measures [72].

1.11.2. Pediatric Airway Management

SmartScope is an ML-based algorithm proposed by Clyde et al. for pediatric anesthesia airway management via endotracheal intubation. It recognizes the airway/tracheal anatomy and the position of the vocal cords, confirming the intubation using bronchoscopy and video-laryngoscopy. As stated in the study, the segmentation module output can be used as a tracheal GPS to enable the localization of the tracheal rings [73].

2. MATERIALS AND METHODS

This review, designated by IRB code IRB-COD-STD-19-JULY-2024, was formally approved on July 22, 2024.

2.1. Search Strategy

PubMed was searched for recent papers (from 2018 onwards) on “Artificial Intelligence and its Implications in Dentistry”. Additional related studies were explored via ResearchGate, Sci-Hub, and Crossref.

2.2. Eligibility Criteria

To be included in the systematic review, studies must meet the specified inclusion criteria: articles using AI models in various dental sub-specialties, such as endodontics, prosthodontics, periodontics, orthodontics, oral medicine, oral surgery, pediatric dentistry, and imaging. Exclusion criteria were case reports/series, letters to editors, inaccessible full-text articles, and older studies.

2.3. Study Selection

The research title, abstract, and keywords of the pertinent publications were reviewed by the investigators to determine their eligibility. Then, all possibly eligible papers’ full texts were retrieved and carefully reviewed to find research that matched all inclusion requirements. The risk of bias in the included studies was addressed by two reviewers independently assessing each study, ensuring unbiased evaluation. Any discrepancies were resolved through discussion or consultation with a third reviewer.

2.4. Data Extraction

The authors independently screened the titles and abstracts of the chosen studies and selected those eligible for full-text inclusion. The following categories of information were extracted: author, year of publication, type of study, sample size, AI tool, data set used, AI usage (for diagnosis, treatment, or both), imaging modality, detailed usage (classify, detect, segment), performance, validation technique, and accuracy (Fig. 1).
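The extraction categories listed above map naturally onto a structured record. The following is a minimal sketch of how such an extraction form might be represented and tallied; the class name, field names, and helper function are hypothetical and do not describe the tooling actually used in this review. The example values are taken from the corresponding rows of Table 1, and fields not reported there are left empty.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ExtractionRecord:
    """One row of a data-extraction form (hypothetical field names)."""
    author: str
    year: int
    study_type: str
    sample_size: str
    ai_tool: str
    usage: str                              # "Diagnosis", "Treatment", or both
    imaging_modality: Optional[str] = None  # None when not applicable
    detailed_usage: Optional[str] = None    # classify, detect, or segment
    dataset: Optional[str] = None
    performance: Optional[str] = None
    validation: Optional[str] = None
    accuracy: Optional[str] = None


def tally_by_usage(records):
    """Count how many studies fall under each AI usage category."""
    return Counter(record.usage for record in records)


records = [
    ExtractionRecord("Pauwels", 2021, "Comparison study", "10 sockets",
                     "DL", "Diagnosis", "IOPA", "detect"),
    ExtractionRecord("Qiao X", 2020, "Prospective study", "21 extracted teeth",
                     "DL", "Treatment", None, "detect"),
    ExtractionRecord("Fukuda M", 2019, "Evaluation study", "330 VRF teeth",
                     "DL", "Diagnosis", "OPG", "detect"),
]
usage_counts = tally_by_usage(records)
```

Keeping one record per study makes summary counts, such as the number of studies per usage category, a direct aggregation over the extracted data.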

3. RESULTS

This systematic review included articles from 2018-2024 in which AI was implemented in various fields of dentistry. The primary AI tools utilized were ML and DL, with subcategories of DL including CNNs and ANNs. Other branches, like fuzzy logic [26], deep geodesic learning [35], and VR [72], were also included. AI architectures, such as U-Net and its variants [17], logistic regression, random forests, gradient boosting [18], YOLO [20], and Sparse Octree (S-Octree) [44], were mentioned, as displayed in Table 1.

Fig. 1. Literature search process using the PRISMA search strategy.

| Author, Year/Refs. | Type of Study | AI Tool* | Imaging Modality+ | Usage Modality‡ | Application§ | Sample Size |

|---|---|---|---|---|---|---|

| Endodontics | ||||||

| Kruse C (2019) [9] | Ex vivo histopathological study | N/A | CBCT | Diagnosis | Assessment of apical periodontitis | 223 teeth with 340 roots |

| Pauwels (2021) [10] | Comparison study | DL | IOPA | Diagnosis | Detection of periapical lesions | 10 sockets |

| Qiao X (2020) [13] | Prospective study | DL | N/A | Treatment | Detection of the correct length of the root canal | 21 extracted teeth |

| Fukuda M (2019) [14] | Evaluation study | DL | OPG | Diagnosis | Detection of vertical root fractures (VRF) | 330 VRF teeth |

| Sherwood AA (2021) [17] | Evaluation study | DL | CBCT | Diagnosis | Segment and classify C-shaped canal morphologies | 135 CBCT images |

| Herbst CS (2022) [18] | Retrospective longitudinal study | ML | IOPA | Diagnosis | Association analysis between various factors and treatment failure | 591 permanent teeth |

| Li Y (2022) [19] | Prospective study | DL | IOPA | Diagnosis and treatment evaluation | Automated evaluation of root canal therapy | 245 root canal treated X-ray images |

| Orthodontics | ||||||

| Talaat S (2021) [20] | Retrospective study | DL | N/A | Diagnosis and treatment plan | Detection of malocclusions from intraoral images | Intraoral images of 700 subjects |

| Kazimierczak N (2024) [21] | A comprehensive review | DL, ML | Lateral cephalogram | Diagnosis and treatment plan | Detecting skeletal age, patient monitoring, establishing a treatment plan | 139 articles |

| Imaging | ||||||

| Tuzoff (2019) [23] | Retrospective study | DL | OPG | Diagnosis: teeth detection and numbering | Classifies detected teeth images according to the FDI notation | 1574 images |

| Hung (2020) [25] | Systematic review | DL, ML | IOPA, OPG, CBCT, cephalometrics, MRI | Diagnosis of multiple dental and maxillofacial diseases | Localization of landmarks, classification/segmentation of maxillofacial cysts and/or tumors, identification of periodontitis/periapical disease | 50 electronic search titles |

| Carrillo-Perez (2022) [26] | Systematic review | DL, ML | IOPA, OPG, CBCT | Diagnosis and treatment planning of multiple dental procedures | Disease identification, image segmentation, image correction, and biomimetic color analysis and modeling | 120 eligible papers |

| Abdou MA (2022) [27] | Systematic review | DL, ML | IOPA, OPG, CBCT, CT, MRI, Ultrasound, PET, Fluorescence Angiography, and even photographic images | Diagnosis and treatment planning using CAD systems | Classification, detection, localization, segmentation, and automatic diagnosis | 105 articles |

| Liu MQ (2022) [29] | Retrospective study | DL | CBCT | Diagnosis: automatic detection of the mandibular third molar (M3) to the mandibular canal (MC) | Training, detection, segmentation, classification, and validation, testing of lower M3 | 254 CBCT scans |

| de Dumast (2018) [32] | Prospective study | DL | CBCT | Diagnosis: classification of temporomandibular joint osteoarthritis | Classification: web-based system for deep neural network classifier of 3D condylar morphology, computation and integration of high dimensional imaging, clinical, and biological data | Training dataset consisted of 259 condyles |

| Huang (2022) [34] | Retrospective study | DL | CBCT | Diagnosis: assessment and classification of implant stability | Training and testing of image processing algorithm for implant denture segmentation, volume of interest (VOI) extraction, and implant stability classification | 779 implant coronal section images |

| Torosdagli (2019) [35] | Retrospective study | DL | CBCT | Diagnosis | Segmentation and anatomical landmarking | 250 images |

| Ahn (2022) [36] | Retrospective study | DL | CBCT | Diagnosis and treatment planning: analysis of facial profile processing in relation to CBCT images for diagnosis and treatment planning of orthodontic patients | Detection of 23 landmarks, partitioning, detecting regions of interest, and extracting the facial profile in automated measurements of 13 geometric parameters from CBCT images taken in natural head position | 30 CBCTs with multiple views |

| Yang (2020) [37] | Retrospective study | DL | OPG | Diagnosis: Automated Detection of Cyst and Tumors of the Jaw in Panoramic Radiographs | Detection, classification, and labeling of images into 4 categories: dentigerous cysts, odontogenic keratocyst, ameloblastoma, and no cyst | 1602 lesions on panoramic radiographs |

| Chang (2020) [38] | Retrospective study | DL | OPG | Diagnosis and prognosis: automatic method for diagnosing periodontal bone loss and staging periodontitis of each individual tooth | Detection of bone loss and conventional CAD processing for classification used for periodontitis staging according to the new 2017 criteria classification | 518 images |

| Restorative dentistry | ||||||

| Shetty S (2023) [41] | Systematic review | ML, DL | N/A | Diagnosis | Utilizes AI for enhancing the precision and consistency of dental shade selection in restorative and cosmetic dentistry. | 15 articles selected for review |

| Deniz Arısu H (2018) [42] | Experimental study | DL | N/A | Examination of material properties | Analysis of the effect of different light curing units and composite parameters on the hardness of dental composites. | 60 specimens |

| Yamaguchi S (2019) [43] | Prospective study | DL | 3D Stereolithography models from a 3D oral scanner | Diagnosis | Predict debonding of CAD/CAM composite resin crowns using 2D images from 3D models | 24 cases with a total of 8640 images |

| Bei Zhang (2019) [44] | Prospective study | DL | 3D dental model data | Diagnosis and treatment | Classification, detection, segmentation of tooth preparation margin line | 380 oral patients' dental preparation models |

| Revilla-León M (2022) [45] | Systematic review | ML, DL | IOPA, near-infrared transillumination techniques, CBCT | Diagnosis and treatment | Diagnosing dental caries, vertical tooth fractures; detecting tooth preparation margins; predicting restoration failure | 34 articles analyzed |

| Periodontics | ||||||

| Sachdeva S (2021) [46] | Systematic review | DL | Radiographs, CAD/CAM | Diagnosis, treatment planning | Aids in screening, diagnosis, and treatment planning of periodontal diseases by early detection | 25 articles included |

| Nguyen TT (2021) [47] | Systematic review | ML, DL | Radiographs, CBCT, CAD/CAM | Diagnosis and treatment planning | Directs treatment to make informed decisions in dentistry | 32 articles included |

| Reddy MS (2019) [48] | Retrospective study | ML | OPG | Diagnosis | Aid in the diagnostics in medical and dental fields | 2482 panoramic radiographs |

| Yauney G (2019) [49] | Prospective study | ML | IOPA | Diagnosis | Correlate information derived from clinical examination, dental radiographs, and patient's medical history to diagnose the disease automatically in the future | 1215 intraoral fluorescent images |

| Revilla-León M (2023) [50] | Systematic review | ML, DL | Intraoral clinical images, IOPAs, OPGs, bitewing | Diagnosis | Detecting dental plaque, diagnosis of gingivitis, diagnosis of periodontal disease from intraoral images, and diagnosis of alveolar bone loss from periapical, bitewing, and panoramic radiographs | 24 articles were included |

| Prosthodontics | ||||||

| Singi SR (2022) [55] | Systematic review | DL | CAD/CAM | Diagnosis and treatment planning utilizing CAD/CAM in prosthesis fabrication | Application in fixed and removable prosthesis along with maxillofacial prosthesis to reduce errors | 380 dental preparations |

| Ali IE (2023) [56] | Literature review | DL | CAD/CAM | Diagnosis and treatment planning in prosthodontic workflow | Collecting information of the patient oral condition to enhance the prosthetic treatment | 15 articles |

| Mahrous A (2023) [57] | Comparative study | ML | N/A | Treatment planning and aiding in removable prosthesis study design | An app that aids in treatment planning and the design of the RPD | 73 RPD designs |

| Oral surgery | ||||||

| Patcas (2019) [64] | Prospective | ML, DL | Extraoral and intraoral pictures | Treatment plan and prognosis | Score the patient's age appearance and facial attractiveness based on pre- and post-orthodontic treatment photographs | 2164 Pre- and post-treatment photographs |

| Kim BS (2021) [65] | Preliminary study | DL | OPG | Treatment planning | Determine whether CNNs can predict paresthesia of the IAN using panoramic radiographic images before extraction of the mandibular third molar | 300 preoperative OPGs |

| Liu Z (2021) [66] | Retrospective study | ML, DL | OPG | Diagnosing and treatment planning for surgical procedures | CNN algorithm that significantly improves the classification accuracy | 420 OPGs |

| Ghods K (2023) [40] | Systematic review | ML, DL | Intraoral clinical images, IOPAs, OPGs, bitewing | Diagnosis, treatment planning | Detection of dental abnormalities and oral malignancies based on radiographic view and histopathological features | 116 articles included |

| Mahmoud H (2021) [67] | Systematic review | ML | Intraoral clinical images, IOPAs, OPGs, bitewing | Diagnosis, treatment planning | Methods for diagnostic evaluation of head and neck cancers using automated image analysis. | 32 articles were included |

| Patel P (2020) [59] | Prospective | ML, DL | OPG, CBCT | Diagnosis and treatment planning | Determining the specific signs of close relationship between impacted mandibular third molar | 120 individuals |

| Oral medicine | ||||||

| Patil S (2022) [69] | Systematic review | DL | CT | Diagnosis of oral cancer | Detection of precancerous and cancerous lesions | 9 articles |

| Al-Rawi N (2022) [70] | Systematic review | DL, ML | N/A | Diagnosis and detection of oral cancer | Uses AI in histopathologic images for the diagnosis and detection of oral cancer. | 17 studies with a total of 7245 patients and 69,425 images |

| Moosa Y (2023) [71] | Survey research | ML | N/A | Diagnosis and treatment planning | AI detection and collection of patient data, medical history, imaging and clinical features | 200 dentists answered questionnaire |

| Pediatric dentistry | ||||||

| Barros (2023) [72] | Systematic review | VR | N/A | Treatment plan: anxiety reduction among children | Behavior management | 22 randomized control trials included |

| Matava (2020) [73] | Systematic review | ML, DL | N/A | Diagnosis; treatment planning: pediatric anesthesia airway management; prognosis: predicting outcomes during pediatric airway management | Diagnosis, monitoring, procedure assistance, and predicting outcomes during pediatric airway management | 27 articles |

| Author, Year/Refs. | Dataset Used | Performance | Validation | Accuracy of the Results |

|---|---|---|---|---|

| Orhan (2020) [30] | 153 CBCTs with periapical lesions obtained from 109 patients | Only one tooth was incorrectly identified/numbered; 142 of a total of 153 periapical lesions were detected | 92.8% reliability | No significant difference between manual and CNN measurement methods (p > 0.05) |

| Serindere (2022) [33] | 148 healthy and 148 inflamed sinus images | Diagnosis of sinusitis in OPGs was moderately high, whereas it was clearly higher with CBCT images, being recognized as the “gold standard” for diagnosis | By average accuracy, sensitivity, and specificity of the CNN model | - |

Of the 44 studies presented in Table 1, 15 were systematic reviews, 11 retrospective studies, 8 prospective studies, 2 evaluation studies, 2 comparative studies, 1 survey research, 1 preliminary study, 1 experimental study, and 1 ex vivo histopathological study.

As displayed in Table 1, various imaging modalities were used, including IOPA, orthopantomogram (OPG), bitewing, CBCT, cephalometrics, magnetic resonance imaging (MRI), CT, ultrasound, positron emission tomography (PET), fluorescence angiography, 3D stereolithography models, near-infrared transillumination techniques, CAD/CAM, and intraoral/extraoral photographs. One study by Qiao et al. used an impedance method instead of traditional imaging [13].

AI tools aimed at diagnosis, treatment planning, or both were evaluated. Nineteen studies used AI for diagnosis alone, 3 for treatment alone, and 18 for both diagnosis and treatment. AI was used for detection in 15 studies, segmentation in 7 studies, and classification in 4 studies. Three studies utilized AI for detection, classification, and segmentation together.

Sample sizes varied across the studies. The largest sample size was 7245 patients for detecting and diagnosing oral cancer using histopathologic analysis [70]. Pauwels et al. used the smallest sample size, 10 sockets in bovine ribs, to identify periapical lesions [10]. Samples included teeth, roots, sockets, X-ray images, publications, intraoral images, CBCT scans, preparation models, lateral cephalograms, and OPGs. Three studies used patients as samples, four used teeth, three used CBCT scans, and four used OPGs.

As per Table 2, 449 sinus and periapical images were obtained from a total of 405 patients. Both AI tools performed well: in the study by Orhan et al., only one tooth was incorrectly identified, with 92.8% reliability [30], while in the study by Serindere et al., CBCT was recognized as the gold standard, with high accuracy, sensitivity, and specificity [33].
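The pooled figures drawn from Table 2 can be reproduced with simple arithmetic. The short Python sketch below is illustrative only. It assumes that the 92.8% reliability corresponds to the 142 of 153 periapical lesions detected, and that each sinus image is counted as one patient; neither assumption is stated explicitly in the table, and the variable names are hypothetical.

```python
# Counts as reported in Table 2 (Orhan 2020 [30]; Serindere 2022 [33]).
detected_lesions = 142   # periapical lesions detected by the CNN
total_lesions = 153      # periapical lesions present on the CBCTs

# Assumption: "reliability" is the share of lesions that were detected.
detection_rate = detected_lesions / total_lesions

cbct_scans = 153         # CBCTs with periapical lesions
sinus_images = 148 + 148 # healthy + inflamed sinus images
total_images = cbct_scans + sinus_images

# Assumption: one patient per sinus image, plus the 109 CBCT patients.
total_patients = 109 + sinus_images

print(f"Detection rate: {detection_rate:.1%}")
print(f"Total images: {total_images}")
print(f"Total patients: {total_patients}")
```

Under these assumptions, the totals agree with the 449 images and 405 patients quoted in the text.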

4. DISCUSSION

The main aim of this study was to evaluate whether AI could be applied in dental clinical practice to enhance diagnoses, patient care, and treatment outcomes. AI has been successfully employed in multiple fields of dentistry, but it is essential to delve further into its uses and outcomes to evaluate its continuous reliability in dentistry.

In endodontics, Pauwels et al. constructed a DL model with high sensitivity (0.87), specificity (0.98), and ROC-AUC (0.93) [10]. Vo et al. generated another tool with similar diagnostic accuracy [11]. Maria et al. presented an AI solution with an accuracy of 70% and a specificity of 92.39%, surpassing the performance of some human experts in the field [12].
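For readers less familiar with the metrics quoted in this section, the following minimal Python sketch shows how sensitivity, specificity, and ROC-AUC are conventionally computed from a classifier's outputs. The labels, scores, and 0.5 decision threshold are hypothetical toy values, not data from any cited study, and the function names are illustrative.

```python
from typing import Sequence, Tuple


def sensitivity_specificity(y_true: Sequence[int], y_pred: Sequence[int]) -> Tuple[float, float]:
    """Return (sensitivity, specificity) for binary labels (1 = lesion, 0 = healthy)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    return tp / (tp + fn), tn / (tn + fp)


def roc_auc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """ROC-AUC as the probability that a positive case outscores a negative one.

    Ties between a positive and a negative score count as half a win.
    """
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical toy data: 4 teeth with a lesion, 4 healthy teeth.
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.6, 0.3, 0.7, 0.2, 0.1, 0.05]  # model confidence per tooth
y_pred = [1 if s >= 0.5 else 0 for s in scores]      # assumed 0.5 threshold

sens, spec = sensitivity_specificity(y_true, y_pred)
auc = roc_auc(y_true, scores)
print(f"sensitivity={sens:.2f} specificity={spec:.2f} ROC-AUC={auc:.3f}")
```

Sensitivity and specificity depend on the chosen threshold, whereas ROC-AUC summarizes ranking quality across all thresholds, which is one reason studies often report all three.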

Detecting VRF can be challenging for dentists. Ziyang et al. suggested that the AI ResNet50 displayed the highest accuracy and sensitivity for VRF diagnosis [15]. Hina et al. proposed a similar model with a mean ROC of 0.97 in detecting cracked teeth [16].

In orthodontics, CNNs have been found to be successful in 3D cephalometric landmark identification. Talaat et al. applied the YOLO technique to intraoral photographs, producing an AI model that detected malocclusions, such as overjet, overbite, crossbite, crowding, and spacing, with an exceptional accuracy rate of 99.99% [20].

The use of AI in imaging has flourished in recent years. From identifying dental anatomy [23] to detecting caries [24], CNN models have demonstrated accuracies of 95.8-99.45% and 75.5-93.3%, respectively [23, 24]. CBCT is the most applicable imaging modality for AI. One of the most significant CNN frameworks is U-Net, which is employed in image segmentation to identify landmarks, anatomy, and pathologies [31].

Pierre et al. [28] and Liu et al. [29] found that CNNs can accurately detect the association between the IAN and the third molar, as well as the IAN itself on CBCT images. Although CNNs facilitate this process, the accuracy remains only acceptable and requires improvement.

Kang et al. proposed a technique to detect ROIs from CBCT images to segment precise 3D teeth with a 93.35% accuracy, allowing the identification of various dental diseases within or around the tooth structure [30]. U-Net identified unobturated MB2 canals on endodontically obturated maxillary molars and C-shaped root canals of the second molar [31]. Pulp segmentation was also achievable through the same procedure.

CBCT could also be utilized for dental implant placement. A new CNN technology was able to plan an implant's initial location within 0.001 seconds. Huang et al. found that it could classify implant stability, extract zones of interest, and segment implants, each within 3.76 seconds and with an accuracy of above 92% [34].

A DL technique presented by Torosdagli et al. was able to segment anatomy and automate landmarking in 3 steps, specifically benefitting orthodontics [35]. In agreement, Ahn et al. utilized Mask R-CNN to identify 23 landmarks, completing in 30 seconds an analysis equivalent to a manual analysis that took 30 minutes [36].

AI in oral surgery uses similar principles for CBCT to relate the position of the tooth to be extracted or the lesion to be removed to other important anatomical structures, like the IAN or maxillary sinus, reducing complications to a minimum [28, 29].

Kwon et al. and Hyunwoo et al. showed promising results with YOLO, a DL model aiding in cyst and tumor classification. This tool detected and classified ameloblastomas [37] and bone-related diseases, like periapical cysts, dentigerous cysts, keratocystic odontogenic tumors (KCOT), bone fractures, jaw abnormalities, and even bone cancers, with an overall accuracy close to 80% [74-77]. Despite encouraging results, these models revealed variability due to the different radiologic features of pathologies, such as shape, location, and internal structure differences. Consequently, CNNs for cyst and tumor classification need further development before being employed in dental practice.

AI's role in imaging also includes bone loss detection and periodontal condition categorization. Chang et al. developed a DL model to diagnose and stage periodontal disease, which proved equivalent, if not superior, to manual detection and diagnosis. Clinicians may overlook periodontal bone loss on panoramic imaging, where computer-assisted diagnosis has proven efficient in early detection and intervention [38].

In restorative dentistry, AI has shown promise. V. et al. proposed an ANN-based technology that identifies caries on oral images, which could be especially valuable in helping less experienced dentists diagnose interproximal caries [39]. However, no algorithm exists yet for caries severity detection. In agreement, Ragda et al. concluded that AI could also differentiate dental restorations based on extent, distribution, and gray values on dental radiographs [40].

In prosthodontics, AI tools assist in designing prostheses, especially RPDs, where dentists struggle to select the best therapy for patients. AI optimizes given information, like the number and location of lost teeth, occlusion, and the state of remaining teeth, to aid in patient-specific treatment planning. AiDental software, which is widely used in dental education, auto-generates customized RPD designs [57]. The design and fabrication of maxillofacial prostheses with CAD/CAM systems are also possible.

A case-based software built on pre-existing programmed RPD designs displayed 96% accuracy, indicating its potential for patients using RPDs. In contrast, another study that converted the documented RPD design into a 2D form achieved 100% accuracy for the mandible and 75% for the maxilla [56].

In oral medicine, ANN-based systems using hyperspectral images or laser-induced autofluorescence, a non-invasive diagnostic technique that uses laser light to detect abnormal tissue changes, differentiated between normal and premalignant tissues, exhibiting 98.3% accuracy and 100% specificity. Yet, their performance for the diagnosis was not significant. Prediction of lesion development and prognostic features was achieved using DNNs with a high accuracy rate of 96% [69].

CNNs also aid in histopathologic analysis to stage cancer through the level of keratinization or detecting keratin pearls via image segmentation [70].

In pediatrics, both Ta-Ko et al. [74] and Barros et al. [72] agree that VR serves as a major aiding tool. Distraction techniques ensure workflow efficiency by diverting children's attention from the otherwise “scary” procedure. In agreement, Osama et al. confirmed this through their randomized clinical trial [75].

Additionally, SmartScope, an AI-based technology described by Matava et al., assists in pediatric airway management and endotracheal intubation. Segmentation of tracheal anatomy and vocal cords via a GPS localizes the tracheal rings for intubation using bronchoscopy and video-laryngoscopy. This is especially useful as pediatric airways differ in shape and size from adult airways [73].

The limitations of this review include geographical variability in study selection, reliance on a limited number of databases, and a focus on articles published only from 2018 onwards, which may not capture foundational research. Additionally, the inclusion criteria emphasized AI applications in specific dental fields, potentially overlooking emerging areas. The variation in sample sizes across studies may have further impacted the generalizability and reliability of the findings.

The implications of this study highlight the transformative potential of AI in enhancing diagnostic accuracy, treatment planning, and clinical decision-making across various dental specialties. As AI technologies continue to evolve, they are poised to complement human expertise, streamline workflows, and potentially improve access to high-quality dental care globally. However, further research is needed to address data privacy concerns and validate AI models in diverse clinical settings. A comprehensive evaluation is needed before making decisions to ensure patient well-being and maximize success rates [8, 78].

CONCLUSION

AI has the potential to improve patient outcomes, preventive care, workflow efficiency, dental disease treatment, and diagnostic accuracy. Treatment planning may be enhanced by its capacity for pattern recognition and data analysis. However, obstacles remain, including the complexity of dental cases, the need for varied, high-quality datasets, and uncertainty about the interpretability and dependability of AI algorithms. Through continued research, interdisciplinary collaboration, and attention to ethical considerations, AI can augment dental specialists' knowledge and improve patient care in contemporary dentistry.

AUTHORS’ CONTRIBUTION

M.M.M.M.: Study conception and design; M.B.D.: Data collection; H.A.: Data analysis or interpretation; Y.A.: Writing of the paper; S.A.A.F.A.B.: Writing, review, and editing. All authors have reviewed the results and approved the final version of the manuscript.

LIST OF ABBREVIATIONS

| AI | Artificial intelligence |

| ML | Machine learning |

| CNNs | Convolutional neural networks |

| DL | Deep learning |

| CAD/CAM | Computer-aided design and computer-aided manufacturing |

| ANNs | Artificial neural networks |

| DNNs | Deep neural networks |

| CBCT | Cone-beam computed tomography |

| VRF | Vertical root fracture |

| YOLO | You only look once technique |

| DM | Dental monitoring |

| IAN | Inferior alveolar nerve |

| CT | Computed tomography |

| KCOT | Keratocystic odontogenic tumors |

| ROI | Regions of interest |

| R-CNN | Region-based convolutional neural network |

| RPD | Removable partial denture |

| MRI | Magnetic resonance imaging |

| RP | Rapid prototyping |

| AR | Augmented reality |

| VR | Virtual reality |

| IOPA | Intra-oral periapical radiograph |

| PET | Positron emission tomography |

| OPG | Orthopantomogram |

| ROC-AUC | Receiver operating characteristics-area under the curve |

AVAILABILITY OF DATA AND MATERIALS

The data and supporting information are provided within the article.

ACKNOWLEDGEMENTS

Declared none.